State of the Union 2024: A Crisis of Crowding

- E Chan

- Mar 31, 2024

After a disastrous 2022, in which companies laid off nearly as many people as they had hired during the pandemic, 2023 shaped up to be one of the most interesting recovering markets in tech.

While most people speculated that the job market would return to its frothy state, it soon became clear that was not the case. With the demise of Silicon Valley Bank, startups and VCs may not get access to the same credit and financial support that has helped startups grow for the past 40 or so years. Even though companies began hiring again, the number of employees actually hired has not changed much. Worse, the sheer difficulty of getting hired has increased.

In my opinion, the bar for getting hired has finally returned to acceptable standards. The only way to get a job in this current market is to ace the interview. Completely. Strategies that relied on luck such as spamming Leetcode questions went from being inefficient to almost completely ineffective. Companies have started to realize they do not need to spend so much on employees and are looking to cut costs anywhere and everywhere they can despite the record year for big tech’s stock prices.

Because of this, we are finally seeing the hangover of the cultural zeitgeist around tech interviews and engineering prowess. For the past decade, people got drunk on the idea of easily coasting into a 6 figure FAANG job and resting on their laurels. Now, people are realizing they have wasted their time coasting, destroyed their careers in the process, and that getting a job is no longer as easy as it once was.

As the AI hype consumes the market, everyone has been claiming it as the engineer job killer. However, I believe the usefulness of AI has been vastly overstated and strong engineers will still be in demand because AI cannot handle the complexity of modern day codebases. AI will, however, be a bigger competitor on the lower end of the engineering skill spectrum.

But the even bigger crisis that few have noticed is that the industry is beginning to suffer a crisis of crowding. More and more engineers are competing for fewer and fewer jobs and will receive less compensation for the same jobs than people of the last decade.

The AI situation is not helping. If you are anywhere near mediocre, you are on the way out.

My Results

Firstly, let’s cover the state of my business. I have decided to no longer upload Youtube videos for a few reasons. There is the issue of my presence. I am not very comfortable with fame on any level. I simply prefer to get results and let my work speak for itself. I am just a conduit for it.

My work has gotten attention but that also made me far more cautious. My words are being parsed and everything I say is being analyzed by people and companies. This is already driving me nuts and is making me paranoid. And this is just with a meager following on Youtube.

However, I am still working with clients. I elected to retain my clients from last year that have not gotten a job. But because of the state of the job market (for reasons I will explain later), it has become increasingly difficult to even get interviews above the L4 level, let alone ace them.

This year I was only able to secure two L5-level interviews for one L4 client, both of which were failures. Let’s dive into the postmortem for this result.

Firstly, I only had 6-8 weeks from the time I was notified to interview day. Because of the job market, I had not contacted these clients and thought it best for them to take their time with the job hunt. However, in this particular case, this was not enough time and the client was on the verge of burnout trying to study and get these interviews. I should have pushed for him to do the interviews at a later date given the burnout state.

Second, there was a heavy emphasis on system design that I could not fill. The client had not worked on systems at scale and therefore, it was a challenge to try and convey how to think about systems to a proficient degree. My guide is sufficient to get people to the L4 level of system design and for those who have seen it before, the L5 level because they are able to fill in the gaps of the structure. However, I have long struggled to fill this gap in for my clients and I am still working on that to this day.

The crux of the issue was designing the system right the first time, with robustness and stability already baked into the design. Having to go back and revise the system for that robustness led to inefficiency in the answer. That tripped us in one interview. The other was a coding interview question where too much time was spent on the first part of the question and there wasn’t enough time for the followup.

That is not an excuse though. As a coach, it is my responsibility to ensure that my clients get the results. I am falling short in this area. To date, I have written a guide and a few exercises myself but I am still testing this out. I plan to release those for free later on this year.

In short, the loss was deserved and reflects a weakness in my teaching. No more, no less.

That said, I have been reluctant to take on new clients. Not just because of my losses this year but also because there have not been any candidates I felt I could add value to, nor anyone I believed in.

Let me shed some light on who I choose to take on as a client. There are only a few criteria I use:

Is this client able to put in the work needed?

Can I trust this client?

Can I add value to this client that is worth my fee?

These are rather simple criteria but most people fail one of these three. I have talked at length about the need to git gud and most people are not willing to put in the time and focus necessary to improve the right way. And if they are not the honest type, I cannot trust that whatever they report back is right and I cannot trust that they will hold themselves properly accountable. Worse, they may make collecting compensation a huge headache.

As Buffett says:

We look for intelligence, we look for initiative or energy, and we look for integrity. And if they don't have the latter, the first two will kill you, because if you're going to get someone without integrity, you want them lazy and dumb.

More interestingly, because of the layoffs of 2022, I had several people approach me. However, I felt they were talented enough that they did not need my help. They just needed to fix 1 or 2 small errors and they could get an offer, even in a restrictive job market. Perhaps this was just a reflection of their fear about the job market.

Was it free money? Yes. Would it be an immoral way of making money? Yes.

I won’t charge people for a service that they do not need. I am offering a valuable service. If I cannot add value to their lives with that service, I have no business making them buy it.

Going forward, my goal is to improve my ability to teach system design in a manner that even someone with no exposure to scale can understand and execute efficiently on. This is a very difficult task and I don’t believe it has been sufficiently tackled. The information is out there certainly. But getting people to execute on it consistently has not been handled.

I will elaborate on this in a future post. The conundrum of teaching system design deserves its own discussion. For now, I would generally like to reserve the discussion for what is happening in the tech industry and how it impacts engineers.

Layoffs: Is It Really About Taxes?

First, let us talk about the continued layoffs in 2023.

Most people blame the Section 174 changes that capitalize and amortize R&D rather than expensing it. Expensing means that you can deduct the full cost up front against taxable income. Capitalizing and amortizing means you can only deduct the cost over 5 years. The argument is that because companies cannot get the full tax deduction, more taxes must be paid now and engineers must be laid off.
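To make the expensing versus amortizing difference concrete, here is a minimal sketch. Every figure in it is made up for illustration, and the schedule is simplified to an even five-year spread as described above; the real rules have more wrinkles than this.

```python
def taxable_income(revenue, rd_cost, other_costs, amortize_years=None):
    """Year-one taxable income under a simplified model.

    amortize_years=None -> R&D is expensed (fully deducted up front).
    amortize_years=5    -> only 1/5 of the R&D cost is deducted this year.
    """
    if amortize_years is None:
        rd_deduction = rd_cost
    else:
        rd_deduction = rd_cost / amortize_years
    return revenue - other_costs - rd_deduction


# Hypothetical company: all numbers are illustrative, not from any filing.
REVENUE = 10_000_000
RD_COST = 8_000_000        # mostly engineer salaries
OTHER_COSTS = 2_500_000
TAX_RATE = 0.21

expensed = taxable_income(REVENUE, RD_COST, OTHER_COSTS)
amortized = taxable_income(REVENUE, RD_COST, OTHER_COSTS, amortize_years=5)

# In this simplified model, tax is only owed on positive taxable income.
tax_expensed = max(expensed, 0) * TAX_RATE
tax_amortized = max(amortized, 0) * TAX_RATE

print(f"expensed:  taxable income {expensed:>12,.0f}, tax {tax_expensed:>12,.0f}")
print(f"amortized: taxable income {amortized:>12,.0f}, tax {tax_amortized:>12,.0f}")
```

In this made-up case the company spends more cash than it brings in, yet under amortization it shows positive taxable income in year one. That is the mechanism behind the argument people make.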

This may explain one leg of the problem but I don’t think it's the entire story.

First, the 2017 Tax Cuts and Jobs Act (TCJA) that introduced the R&D changes also, as its biggest provision, reduced the top corporate tax rate from 35% to 21%. FAANG as a whole reaped massive benefits, decreasing their effective tax rate from 25% to 15%. This contributed to about a 7% earnings per share growth the year the TCJA was enacted and about 3% per year after.

Great news if you were a shareholder. But going to a maximum 21% tax rate before deductions when you were paying 25% after deductions before is questionable grounds for firing people. Sure you may have hired more engineers and that will eat into your costs but the tax code changes all the time. If companies hired and fired every time there was a change to the tax code, there would be a lot of employee turnover. Hiring is done based on what a team and product needs, not on the tax bill. It is absurd to pass on a good hire simply because of a tax bill that can change any year.

The weakness to this point is that companies have hired people ever since the tax rate changes and that the firings must be proportionate to that amount. It makes sense but the friction and penalty to fire half your staff, along with the massive damage to a company’s reputation, is most likely not worth it. CEOs and managers are not as callous as the corporate stereotypes make them out to be.

Second, theoretically, this change only affects midsized companies. Startups would not reap the tax change benefits since the expenses and charges are against profits. Most startups are profit losers and cash flow negative. We should also not really expect large tech companies to be too bothered or, at least, not lay off people any more than the midsized or the cash flow poor companies. They are enormously profitable and will reap the tax benefits of the amortization over a longer time period instead of up front. The entire benefit value will be realized eventually so it won’t make a lot of difference to them, albeit it will yield a smaller net present value.
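The net present value point can be sketched in a few lines. The R&D cost and the 5% discount rate are assumptions chosen for illustration, and the five-year spread is treated as even.

```python
def present_value(cash_flows, discount_rate):
    """Discount a list of year-end cash flows (year 1, 2, ...) back to today."""
    return sum(
        cf / (1 + discount_rate) ** year
        for year, cf in enumerate(cash_flows, start=1)
    )


RD_COST = 1_000_000      # hypothetical R&D spend
TAX_RATE = 0.21
DISCOUNT_RATE = 0.05     # assumed cost of capital

# Tax saved each year from the deduction.
upfront = [RD_COST * TAX_RATE]             # whole deduction taken in year 1
spread = [RD_COST / 5 * TAX_RATE] * 5      # one fifth per year for five years

pv_upfront = present_value(upfront, DISCOUNT_RATE)
pv_spread = present_value(spread, DISCOUNT_RATE)

print(f"nominal total: {sum(upfront):,.0f} vs {sum(spread):,.0f}")
print(f"present value: {pv_upfront:,.0f} vs {pv_spread:,.0f}")
```

The nominal tax savings are the same either way. Only the timing differs, which is why the spread-out version is worth less today but is not lost.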

This leads me to the third point. If you assumed that companies were firing because of the tax code change, the expected results are not in line with observable reality. Layoffs aren’t exclusive to the tech sector, and the tech companies that should be firing engineers aren’t.

I want to illustrate that layoffs aren’t confined to tech companies that are impacted by the R&D change. Layoffs are occurring across the board, across the economy, and across every job. Layoffs aren’t just happening at cash strapped unprofitable companies. Nor are companies only firing engineers.

Even very profitable companies like Meta and Google have made cuts, as have non-tech companies like Geico, and big banks cut 60,000 jobs.

Let’s look at some layoff numbers by the largest layoffs across all sectors.

Notice that the layoffs have hit not just tech companies but consulting, banking, delivery, and even trucking. This graphic is a bit misleading. British Telecom (BT) announced that it would lay off 55,000 by 2030 so not all layoffs occurred in 2023. You can also argue that Yellow’s bankruptcy is an outlier as well.

However, looking at the rest of the group, based on the source, it appears that most of the layoffs hit non-engineers. Google’s 30,000 layoff was for AI replacing sales. Accenture is a services and consulting company that was laying people off because of a reduction in billable hours driven by macroeconomic conditions. Amazon’s cuts appear to follow the same line of thinking, mostly focused on the human resources and stores divisions.

To emphasize this point, let’s look at a few notable companies not on the list that are not tech companies that have made some rather drastic cuts.

Let’s take Geico, an insurance company, for instance. Geico laid off 6% of its workforce to “become more dynamic, agile, and streamline our processes.” Translation: management cuts. Here is the employee count. I very much doubt that most of Geico’s employees are engineers.

Or Bank of America, where the CEO says that the employees are being managed out and they have shed 3% of their workforce. The analysis seems to conclude that the biggest cuts are in retail banking and trading, yet the bank has picked up hiring for trading in Europe.

Again, I don’t think Bank of America is firing all their engineers.

So non-tech companies are doing some extreme cuts. But that doesn’t necessarily exclude tech companies. Perhaps the tech companies are concerned with the tax changes and the non-tech companies are concerned with something else?

Let’s look at cash flow starved companies, the unicorns with recent IPOs. These are the biggest companies that are under threat from the changes. After all, without the ability to expense, they will need to pay more in cash for taxes and have every incentive to fire engineers. If they are cash flow starved, they need every dollar they can keep.

Let’s look at free cash flow margins. That is, what is the ratio of the cash that hits their books, after all expenses, dividends, financing, and reinvestments are made, to revenues? This will tell us how much cash a business has to play with after all expenses are paid to keep the business going and the investors happy. The thicker the margins, the more money they have to pay taxes.

At the end of 2022, here’s where some of these tech companies stood. By no means is this list comprehensive. But it should give you a sense of what it all means.

Some of you may need to understand what these terms mean.

Operating income is the profits a company makes from its operations. These will be after your business expenses like advertising and paying staff. This may also subtract non-cash expenses like depreciation and amortization, stock based compensation, etc.

Free cash flow is the amount of cash that a company gets after collecting the profits, paying expenses, reinvesting in the company, paying off debts, dividends, and stock buybacks.

Operating cash flow is the amount of cash that a company gets very specifically only for operation related purposes. This does not include the self-reinvesting or financing. This differs from operating income because this will exclude the non cash expenses like depreciation and amortization, inventory gains and losses, bills paid and owed to external vendors, stock based compensation, and so on.
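As a quick illustration of how these terms turn into margins, here is a small sketch. The company and its figures are hypothetical and not taken from any real filing; each margin is simply the line item divided by revenue, expressed as a percentage.

```python
def margin_pct(amount, revenue):
    """Return `amount` as a percentage of revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return 100 * amount / revenue


# Hypothetical company, figures in millions.
company = {
    "revenue": 2_000,
    "operating_income": -300,     # loses money on paper
    "operating_cash_flow": 150,   # non-cash expenses added back
    "free_cash_flow": 50,         # what is left after reinvestment and financing
}

margins = {
    name: margin_pct(value, company["revenue"])
    for name, value in company.items()
    if name != "revenue"
}

for name, pct in margins.items():
    print(f"{name:<22}{pct:>7.1f}%")
```

A company like this one can report an operating loss while still generating a thin positive cash margin, which is why the sign of operating income alone says little about wriggle room.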

Overall, I want to paint a picture of how much wriggle room a company has and how impacted its operations really are by tax changes.

Note that the numbers are in percentages.

Looking at the numbers, the tax story isn’t a clear explanation. Cash flow thin companies are not all firing engineering like Uber, Doordash, and Roblox. In fact, big tech companies and companies that are enormously profitable are firing non-engineering talent. If the tax benefit from expensing R&D is the main driver, why aren’t big tech companies trying to reap that benefit?

A better interpretation is that the biggest standouts for engineering cuts are the ones where the core operations are deeply unprofitable. A lot of big tech companies appear to be cutting non-engineering roles like middle management, recruiting, and some projects that are not profit centers.

In other words, the cuts are more a function of core operations. Companies that have unprofitable operations are more likely to fire engineering staff. Companies that have profitable operations are more likely to fire non-engineering staff.

Hopefully I’ve done enough to convince you that a tax change is not sufficient to explain cuts across every industry, especially when many companies across many different industries do it and cite macroeconomic conditions and a better explanation exists.

So then why? Why ramp up the firings now? And if you’re profitable, why bother firing? Certainly everyone is screaming macroeconomic conditions. But I will try to answer this later on.

Hunger Games

Why is it so damn hard to get engineering jobs? Sure a slowdown in hiring is nothing new but with not a lot of engineers being laid off, there shouldn’t be a lot of competition.

Some people cling to the hope that the returns of the Mag7 tech companies are a sign that companies will return to the frothy 2019 or 2021 hiring seasons. After such a brutal round of layoffs between the pandemic and 2022, surely a reversion is on the horizon. They cite the increased number of openings and

This is incorrect. Let’s try and confirm the overall trends in the job market a few ways.

First, let’s check the Hacker News “Who is Hiring” and “Who wants to be hired?” threads over time. Hacker News generally attracts career engineers so we can generally exclude junior engineer entries. We will use posts in the hiring threads as a proxy for openings and posts in the “Who wants to be hired?” threads as a proxy for people who are seeking.

You can see that starting this year there are more job seekers than job openings.

Beginning in 2023, the number of job seekers has begun to outstrip the number of openings, and the number of openings has gone down. The spread has begun to widen at levels well past the 2020 pandemic lows.
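One simple way to read the two thread types together is seekers per opening. The sketch below assumes the posts have already been counted per month; the counts are invented placeholders, not the actual Hacker News numbers.

```python
def seekers_per_opening(hiring_posts, seeker_posts):
    """Map each month to seeker posts divided by hiring posts.

    A value above 1.0 means more people looking than openings posted.
    Months missing from either series, or with zero openings, are skipped.
    """
    ratios = {}
    for month, openings in hiring_posts.items():
        if openings > 0 and month in seeker_posts:
            ratios[month] = seeker_posts[month] / openings
    return ratios


# Placeholder counts for illustration only.
hiring = {"2021-06": 1000, "2023-06": 400}
seekers = {"2021-06": 500, "2023-06": 600}

ratios = seekers_per_opening(hiring, seekers)
for month, ratio in sorted(ratios.items()):
    print(f"{month}: {ratio:.2f} seekers per opening")
```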

Another data point is the trend of job posting in the UK. Granted it's not an apples to apples comparison but this should give you a sense of how much more difficult finding a job has been compared to years past.

Overall, 2023 has absolutely been abysmal for everyone. The number of openings has been extremely volatile, much more so than in years past, and sits at depressed levels that are well below pre-pandemic and at 2016 levels. This could mean that fewer people are applying, job postings are taking longer to fill, or there are not a lot of jobs relative to other sectors. It’s not 100% conclusive but it’s not great news either.

Notice the uptick in contractors as well. This will be important later on.

If you were hoping the new grad/junior (L3) level wasn’t so bad, I don’t have great news for you. The number of openings has been absolutely decimated. While getting an L3 job has always been hard, there have always been companies willing to hire L3 engineers, invest in them, and grow their potential. Several brag reddit posts pre-2020 have famously shown anyone can just get a job at big tech. Perhaps it’s because I have ads blocked but I don’t hear anyone peddling their tech courses right now.

But if you actually look into the distribution of job openings by level, you can see that the market is utterly bifurcated along the midlevel line with a huge skew right. This means that there are fewer junior engineer slots available.

This seems to verify some of the earlier data that the overall amount of job openings has been completely destroyed as well. Unfortunately I could not find the data to find the number of openings before the pandemic outside of the UK data. That has shown a 66% drop in junior level openings. Prorating this distribution with the UK data, we can estimate that perhaps 26% of all job openings were at the junior level, 53% at the mid level, and 20% at the senior level before the pandemic.
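The prorating step can be sketched as follows. The current split used here is a hypothetical placeholder, and the sketch assumes only the junior level dropped while mid and senior openings held flat, which is a simplification.

```python
def pre_drop_distribution(current_share, drops):
    """Undo a fractional drop per level, then renormalize to 100%.

    current_share: level -> current share of openings (any consistent unit)
    drops: level -> fractional drop since the baseline (0.66 means down 66%)
    """
    restored = {
        level: share / (1 - drops.get(level, 0.0))
        for level, share in current_share.items()
    }
    total = sum(restored.values())
    return {level: 100 * value / total for level, value in restored.items()}


# Hypothetical current split in percent, for illustration only.
current = {"junior": 10, "mid": 60, "senior": 30}

# Assumption: only junior openings fell, by the 66% from the UK data.
before = pre_drop_distribution(current, {"junior": 0.66})

for level, pct in before.items():
    print(f"{level:<7}{pct:>6.1f}%")
```

The estimate is only as good as the assumption that the other levels did not move, so treat the result as a rough bound rather than a measurement.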

But even if you assume the distribution hasn’t changed since before the pandemic, this is already an extremely brutal situation: a shrinking job market with less than 15% of its openings available for junior engineers.

What hasn’t been noticed is that the drop in junior job openings is made worse because the amount of competition has dramatically increased. The number of computer science graduates has increased by 50% since I graduated in 2016. This means more and more people are competing for fewer and fewer slots. The difficulty and competition are immense.

A 50% increase in participants for a severe decrease in openings is akin to trying to exit a highway during morning normal hours versus rush hour in Florida. But in this case, there is a shrinking exit and more people running for it.

This is a monster of the industry’s own making. Because of the push for STEM programs and graduates and the promise of easy money and a lucrative career, more and more engineers have graduated at all time high levels. However, keep in mind that the degrees conferred reflect a 4 year delay in the initiatives because it takes 4 years to complete college. This also explains why we see a spike of computer science graduates 4 years after the 2001 dot com bubble burst.

The Implications: Git Gud or Git Replaced

Now that I’ve presented the facts, let’s talk about what both these peculiarities mean. This part is my speculation but the information in here is worth noting.

With respect to layoffs, we are just seeing a continuation of a trend in the tech industry that has quietly gone unnoticed. Even before the pandemic, companies have slowly replaced their full time employees with contractors in an effort to cut costs. It is to the point where places like Google are reportedly 50% composed of contractors (note that Google claims that it only uses .5% - 3%). By using contractors, companies don’t have to pay benefits or manage them with the same overhead as full time employees, thereby cutting costs.

One of my clients who got a Google offer was one of those contractors at Meta. He was in a state of purgatory for a year while Meta wasn’t sure if they were going to renew his contract despite repeated promises. You can imagine what a nightmare this is for truly hard working engineers who want to work hard at a salaried job.

But for the companies, they have also learned their lesson about overhiring and realized that they don’t need as many employees as they think. Zuckerberg famously said that Meta would have a year of efficiency where he would retain the cuts from 2022 and make further cuts in middle management. There is no longer a need to have managers manage managers who manage only a few non-impactful expensive engineers in hopes of needing them for a future project.

This also explains the huge layoffs. In profitable companies, a lot of these cuts are around recruiting and if you consider that companies are looking to replace people with contractors and temporary workers, it makes sense. In the companies with bad core operations, they could easily be looking to do the same and cut costs wherever they can to stay afloat.

With the era of free money at 0% interest rate over and slowing user growth, the opportunity cost to build and ship these products has increased. There is no longer a need to blindly throw engineers at a product to get meager gains. Furthermore, since the engineering codebase is built out with mature products, there is no need to grow new teams or projects. The L3 positions that were commonly hired to fix bugs and small projects and grow talent from the ground up have been eliminated. The opportunities to make a new impact are gone.

This is before you consider the rise of AI in the past year that will most likely make these opportunity losses permanent, especially since AI is pretty good at creating simple code very quickly.

You can begin to see why companies would double down on replacing full time employees with contractors and hiring developers in cheaper countries. With no need for weak engineers to build random products to grow revenues, only thoughtful and capable engineers are needed. You must prove that you are the cream of the crop. Or else, why wouldn’t they replace you with a contractor or someone outside the US? Why bother paying a salary that could cover 2 or 3 experienced engineers in other countries when the work needed doesn’t require the skill premium?

Git gud or get replaced. There are no more handouts.

Recall that in an earlier letter I said that there was less of a need for product and more of a need for infrastructure and that product can be written by anyone without thinking.

“Again, you don’t need geniuses. You need geniuses for the infrastructure and code monkeys to drive the products.”

“The most efficient course of action for companies is to cut costs and create 2 tracks of salaries: the product engineers and the infrastructure engineers…You don’t need to pay a code monkey the salary of a thoughtful competent hard worker.”

“Hard times flushes out the frauds and I don’t see us coming back to a tech mania any time in the future.

If anything, this means that only the hardcore will survive. The strong. The people who truly understand their skill and craft.”

I recommend you re-read my thoughts. Not to applaud myself: nothing about this development makes me happy. It is genuinely concerning and potentially very dangerous.

First, this is especially brutal to the new grad engineers. Not just that, the competition is even more brutal than previously stated. In the last section, I was only referring to new grad positions and new grads competing against more and more new grads for a diminishing number of spots. But in light of how companies are considering their future hiring, you should also consider that new grads and junior engineers are also competing against the lower half of L4 engineers. Almost everyone in the industry who has never worked on a codebase at scale is automatically labeled an L4.

This is why the hiring bar is so high. The questions have not changed but the standards by which the answers are judged have. There are just too many people gunning for the same spot so companies can afford to raise the bar and pick the best of the best. Or at least the most economically sensible.

My belief is that junior engineers will have to spend their first few years in a sort of occupational purgatory and accept a lower salary than previous generations. They will be competing against other junior engineers, some midlevel engineers, contractors around the world, and even full time engineers in other countries. You can also add AI to this list in the near future.

Extreme competition is terribly degenerate. But it may be unavoidable. I have no solution for this.

Second, this is a national security issue. This shift represents an investment in brain power outside of the US that will stay outside the US. The US already relies on a lot of talented workers who come from other countries, including and especially China. However, the hostile social environment since the pandemic has made several promising Chinese workers rethink their future in the US. This is not unlike the increased hesitation of Indian students to study in the US. As a result, you end up with incidents where an ex-Google AI employee stole several sensitive AI documents over the course of 2 years to potentially be used by startups in their home country.

This should send a clear signal to companies: attract and retain your talent, no matter who or where they are from because losing them is potentially very dangerous. Investing in outside talent for cheap is a shortcut to easy profits but increases the risk of long term damage from IP theft. I don’t think Meta would like it very much if their AI technology was in the wrong hands either. Yet by increasing the use of contractors and high turnover employees for more sensitive projects, this risk will increase.

This leads me to the third reason: the future of engineering in the US. Hiring people outside the US to do grunt work is nothing new. Human software testers are already outsourced at a lot of companies. Where I draw the line is when people mistakenly assume that, over the long run, continuing to outsource work is sustainable. As a former contractor myself, I can tell you that there is very little care in whether or not this code lives or dies because there is no guarantee of a contract extension. Therefore, as the code is iterated on, the sustainability of the codebase decreases dramatically. A small to medium sized codebase may be able to turn over every 2-3 years and this may be capital efficient.

But this is very foolish. Proper software architecture requires a staff of engineers that are focused on the long term success of the software. Just because you pass along 100% of how something works doesn’t mean you’ve covered everything. You also need to know how the software doesn’t work and what is likely to break. It is this knowledge of via negativa that is the difference between knowledge and wisdom.

Without the next generation, who will be able to carry that torch? Who will have that wisdom?

Admittedly, I have much less sympathy for individuals with established careers who are getting culled and don’t have the skills to continue because they have had an entire bull market to run their careers properly instead of coasting. A transition to management seems unlikely.

At any rate, this trend is not great and I do not have any good solutions to this. With the question of replacement on the table, we should now turn ourselves to the hot topic of the day: AI.

AI: Are We There Yet?

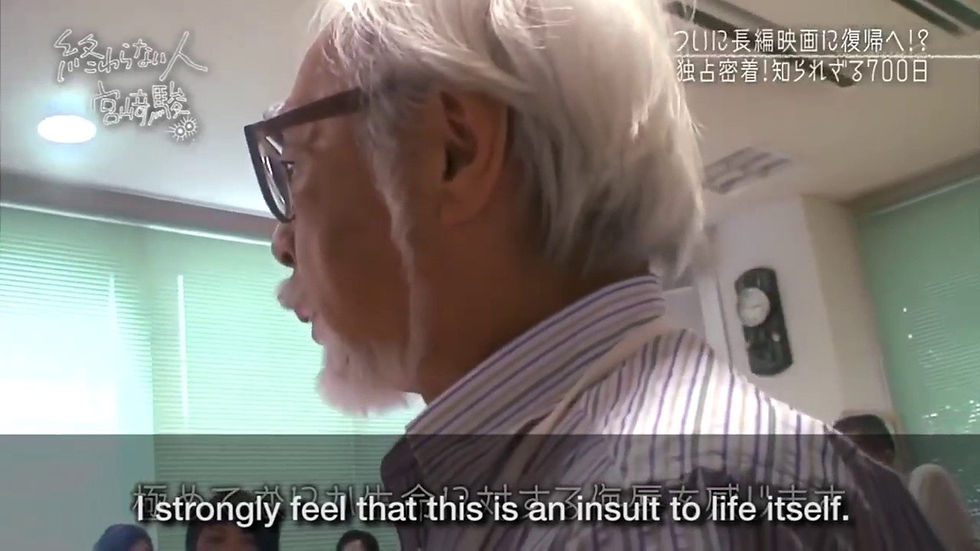

You may have noticed the colorful description at the top of this address by Hayao Miyazaki, the director of the critically acclaimed film studio Studio Ghibli. In the video, he is presented with a sample of an AI generated human-like zombie creature.

Perhaps one of the biggest issues with technology evangelists is their inability to understand how people actually use technology. In effect, they are simply salesmen who have never even used their own products or seen them used but instead are pushing them onto others.

Much has been said about AI: how it can automate work and how it will do away with a lot of software engineering positions.

But ask an AI evangelist where AI is not useful. That should tell you how irrational the hype around AI is. Certainly a car enthusiast could tell you the limitations of a Lambo. Why should AI be any different?

We are still in the early stages where everyone is speculating on its use. It is indeed useful but nobody really understands how it works or what it actually will be used for. It’s pure speculation.

In fact, some people erroneously believe that it is the hype around AI that has caused many of the data science and engineering positions to be culled. That AI has advanced so much and will continue to advance to the point where people are no longer needed.

This is not quite correct. AI is not nearly good enough to replace engineers yet. It certainly saves time by almost always correctly generating Bash code which has always been a headache to produce. It is also great at summarizing long ERDs or meeting notes. But beyond simple code and natural language processing, it’s almost worthless.

I should know: I’ve been using GitHub Copilot and my copilot appears to be a perpetual drunk. It can’t even generate code without me handholding it with tedious comments and hints which take me as much time to write as if I had written the actual code myself. I would be horrified if a machine with the speech capacity of an inebriated alcoholic is given the same responsibility and power as Sam Altman.

Because of the way models like ChatGPT are generated, they are effectively mimicking human speech, not constructing speech from abstraction and ideas. The model is trained on bodies of text and, based on a multitude of parameters, predicts which word or series of words should come next when given a string of words. In the most oversimplified version, it is not unlike using Google autocomplete repeatedly.
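To illustrate the "autocomplete repeatedly" analogy, here is a toy sketch that counts which word follows which in a tiny text and then keeps picking the most frequent follower. It is not how ChatGPT is actually built; it only shows the flavor of predicting the next word from past text, and the corpus is made up.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def autocomplete(following, start, length):
    """Repeatedly append the most frequent next word, like tapping autocomplete."""
    out = [start]
    for _ in range(length):
        candidates = following.get(out[-1])
        if not candidates:
            break  # the model has never seen anything follow this word
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


corpus = "the cat sat on the mat and the cat sat on the sofa"
model = train_bigrams(corpus)

print(autocomplete(model, "the", 6))
print(autocomplete(model, "dog", 3))
```

The first call produces fluent-looking words that loop back on themselves, and the second stalls on a word the text never contained. Neither involves any idea of what a cat or a sofa is.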

To put that in perspective, that would be me reciting words from a book rather than talking about the underlying ideas. Copying words and sentences that mimic human speech without an understanding of grammar, just because past words seem to be strung together in a particular way.

Some results are undoubtedly useful. Others are just plain wrong. Code and standards change frequently and without a substantial body of data to train on, AI will not keep up. Just ask Google how frequently it has to deprecate old Android APIs. It would be very annoying for AI to continually suggest the deprecated AsyncTask or use a Java library instead of the industry standard OkHttp for HTTP calls. Never mind the fact that Android has been Kotlin first for a few years now.

You can begin to see where the limitations are, to say nothing of the limitations of the ability to write Bash that works in Linux vs. Mac. Despite the fact that Bash has existed for decades, AI still has difficulty distinguishing between Bash commands/functionality that only work in Linux vs. Mac.
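One example I am sure of is in-place editing with `sed`: GNU sed on Linux accepts `-i` on its own, while the BSD sed that ships with macOS expects a backup suffix right after `-i` (an empty string means no backup). A rough Python sketch of a wrapper that picks the right form follows. The function name is hypothetical, and it assumes the default `sed` of each platform; a Mac with GNU sed installed would need the Linux form.

```python
import platform


def sed_in_place_command(expression, path, system=None):
    """Build a sed argument list for in-place editing on the given platform."""
    system = system or platform.system()
    if system == "Darwin":
        # BSD sed (macOS default) needs a backup suffix after -i; "" means none.
        return ["sed", "-i", "", expression, path]
    # GNU sed (typical on Linux) takes -i with no separate suffix argument.
    return ["sed", "-i", expression, path]


print(sed_in_place_command("s/foo/bar/", "notes.txt", system="Linux"))
print(sed_in_place_command("s/foo/bar/", "notes.txt", system="Darwin"))
```

The resulting list could be passed to `subprocess.run`. The point is that the same one-liner needs a platform check to be portable, which is exactly the kind of detail generated scripts tend to miss.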

Beyond the ever-changing, fast-paced nature of tech, there are also a few other issues. AI is great at handling simple tasks quickly but fails when it comes to actually thinking through more complex ones. This is where I begin my argument: AI will never fully replace properly thinking humans.

The first reason is that changes need to be properly managed. I don’t mean this in a productivity workflow manner. A codebase is a complex adaptive system (CAS). Each individual module does an independent piece of work that is fed by the output of another module. The modules may not know that the others exist. Yet their behavior together produces something completely different. This is what is called “emergent behavior”: a behavior of a collective that only exists because of several independent factors working together.

Consider what happened when wolves were overhunted in Yellowstone. Wolves were killed for game, for population control, and to prevent them from hurting humans. However, this caused a series of unintended consequences: coyotes ran rampant, and the elk population exploded, overgrazing willows and aspens. Without those trees, songbirds began to decline, beavers could no longer build their dams, and riverbanks started to erode. Without beaver dams and the shade from trees and other plants, water temperatures were too high for cold-water fish.

One change in one part of the ecosystem caused catastrophic cascading changes everywhere else. The worst part is that nobody could have predicted this. While in hindsight it is easy to say this should have been predicted, it’s only after the lines are drawn that we see what should have been seen.

Can AI handle this? Most definitely not. It takes a thinking human to manage risk and reward to figure out the best implementation. And even then a human can’t predict all these cascading effects. In order to predict this, you’d have to make a prediction based on another prediction based on an assumption that may or may not be true.

In other words, at some level, predictions become no better than rolling a die. Then again, I should heed this as well when trying to judge the future of the industry.

Second, AI in its current state is more suited to idea generation but still needs a human to verify if the idea is right. AI generates essentially word soup that resembles human language that somehow makes functioning code. If given full control, how would you verify that it's going to do what it says it does? If you have spent your life just blindly trusting AI and relying on it, how will you have the skill to step in to intervene?

Using code that you do not understand is so damn dangerous, and it is something every junior engineer learns within the first 6 months on the job. Blindly copy-pasting Stack Overflow into production or repeating code in a codebase isn’t just a violation of the DRY principle (don’t repeat yourself), it’s also massively stupid because you have no idea what the limitations of the code you have just copied are.

Don’t just take it from me. Linus Torvalds, the Linux maintainer, flamed a kernel contributor with the exact same take when that contributor copy-pasted the same code.

Not knowing how an idea or code may kill you is massively stupid and leads to very painful 3am debugging sessions as the on-call.

This leads me to my third big reason why AI is not ready to take over all human jobs. Because a machine cannot take responsibility, it cannot lead. Or rather, it should not. Given how many humans shirk responsibility when the negative consequences of their actions, intended or unintended, come along, this is nothing new. But imagine an AI being given trust and power without accountability or responsibility.

That sounds not only dangerous but insane. Name one organization in the world that would blindly hand over the reins of power to such an irresponsible entity.

There is a big reason why we do not intentionally trust grifters and frauds to manage and lead people. At least not for a long time.

In the future, all three of these reasons may no longer be valid. But I highly doubt it. The reasons I listed are very robust and will stand the test of time, no matter how advanced AI gets. AI may be able to overcome limitations but it will never earn the trust of the humans that construct it. Humans trust other humans and their pets because they can be controlled to a degree. But no human ever trusts an entity that they cannot control or understand, or that they believe will kill them.

Except cats. Because cats are cute and harmless.

So where is AI most useful? AI in its current state is more suited as an assistant to humans and to correct the most common and stupid errors. Common errors are well documented and exist in spades, often with the same answer repeated. The probability that AI spits out random gibberish when given a data set that contains several variations of the exact same code is much lower than when it tries to give birth to an idea that has never been seen before.

AI will not replace human thinking until an AI is able to replace, explain, and outperform human intuition. Humans have evolved over hundreds of thousands of years to intuit dangers and risk before we are able to rationally understand why we feel that way. Those unconscious decisions and gut feelings drive our behavior before we are even aware of them and may not always be accurate but are biased towards survival. AI is biased towards the data it is fed and the patterns that it sees. Even something as basic as n-grams in generative AI is just a way to build patterns from that data.

That being said, this is also why AI will take over a good number of human functions. A person whose job consists of mostly copy-pasting code will quickly be replaced by someone else using AI. In a mature codebase, most code is standardized and most problems are already solved and known. It just takes finding the right lines. Since AI becomes more precise (although not necessarily more accurate) the more consistent its training data is, it is more likely to spit out the right answer in that setting. Why should someone who has no engineering muscle, no ability to problem solve, be paid big bucks to ship a small product every quarter when it is cheaper to have an AI generate the exact same code and have another engineer just spend a week to verify the functionality?

I have warned many times that if all you can do is be a code monkey, you will get replaced. AI is just another entity that will replace you if that’s all you can do: the bare minimum. Beyond that, I don’t know what the future will hold. Give it some time.

Getting Crowded

This leads me to the crux of my letter: a crisis of crowding.

There is no doubt that engineers are needed in today’s software-driven world. The problem is that there are too many software engineers competing for fewer and fewer spots.

A good way to think about this is that over the past 10 years, the need for engineers has grown. Global-scale companies like Uber, Snapchat, Facebook, and Airbnb, unleashed by cheap loans at low interest rates, went on hiring sprees to build products that rely on the network effect and the infrastructure to support them. Make no mistake: this advance happened thanks to Google’s intellectual legacy and prowess, which was shared with Facebook and then with other companies. How to scale and what scale looks like has been copied and replicated, giving other companies the technical ability to achieve the same level of scale.

The playbook has been to grow and capture more users with more products and offerings. In order to do this, a company needs to build and scale the infrastructure to handle that new growth, whether it is the developers writing the code or the users using the systems. Throwing an army of developers at the problem was a no-brainer because capital was cheap.

But one of the beauties of scale is its efficiency. More complex and more efficient software can serve more and more people while needing fewer additional developers for each new batch of users. For 1 million users, you may need 20 developers. For 10 million users, you may only need 40 developers.
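To spell out the arithmetic behind those two illustrative headcounts, here is a quick back-of-the-envelope calculation in Python. The numbers are the made-up ones from the paragraph above, not real company data, and the helper name is hypothetical.

```python
def developers_per_million(users, developers):
    """Developers needed per one million users at a given scale."""
    return developers / (users / 1_000_000)


small = developers_per_million(1_000_000, 20)    # 20.0 developers per million users
large = developers_per_million(10_000_000, 40)   # 4.0 developers per million users

# Extra developers needed for each additional million users between the two points.
marginal = (40 - 20) / ((10_000_000 - 1_000_000) / 1_000_000)

print(small, large, round(marginal, 2))  # prints 20.0 4.0 2.22
```

In this example, users grow tenfold while headcount only doubles, so each additional million users costs roughly two more developers instead of twenty.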

With the end of low interest rate loans and the slowing of growth, there is less of a need for the industry to hire the average engineer to build cookie-cutter products to capture new users. Furthermore, there are not many new industries left to “democratize” that can predictably be profitable.

The industry is now focused on consolidating, stabilizing, and making its operations more efficient. The layoffs, the “year of efficiency”, and the push for AI are signals of this. There is no longer a need to hire an army of average engineers. Just a need to hire smarter ones that can create innovations that eliminate the need for average engineers.

Yet the gap between what the industry needs and what people think it wants has not sunk in. I don’t think most people actually understand this because they are focused on sharing information about how to get hired, not on the motivations behind hiring.

It will be some time before the industry wakes up to this. As an industry, engineering isn’t ready yet to be explicit about gatekeeping. We’ve spent the last 10 years promoting coding and tech as a way to a better life and pushed initiatives to get more people into it. As more and more people get into engineering, the number of opportunities is shrinking and the promises of great rewards may not be fulfilled. In fact, the rewards may be cannibalized.

In closing, I think that the people who are just starting out in their careers for software engineering have a rich pool of engineers to learn from but have an even bigger pool of lousy engineers to be led astray by. They will have a much harder time trying to figure out what will work in the long run and what won’t. They will have more brutal competition to get fewer jobs.

Perhaps the more competitive the world gets, the less reward there is to go around. The quality expected for the same job has gone up. This is also true in investing. As Charlie Munger put it:

“I think value investors are going to have a harder time now that there's so many of them competing for a diminished bunch of opportunities. So my advice to value investors is to get used to making less.”

Going forward, I expect that the people of future generations will be much less rewarded financially for their efforts than my generation. Much of the cracker barrel “wisdom” that has been accumulated over the past decade will become either the baseline norm and provide no advantages or become obsolete altogether.

Don’t get me wrong. There will be opportunities in the future, whether that is in new technology that the old generation can’t keep up with or in new businesses. Heck, I even see pockets of opportunity in the industry around problems that need to be solved, especially around developer productivity. Innovation won’t just end out of nowhere. But where and when it will come is not clear. It took decades to go from the advent of the internet to the iPhone. And smartphones, in turn, enabled social media and mobile utilities like Uber to become massively successful.

Unless you’re very skilled, I just don’t think that the world will be as generous in the next few years as it has been to people in the last decade.
